Robots.txt Guide: Tips and Practical Examples

Working with the robots.txt file is an integral part of technical SEO. Even so, specialists sometimes forget about it or skip it deliberately.

We adapted an article by Michael McManus, “Robots.txt best practice guide + examples.”

What is a robots.txt file?

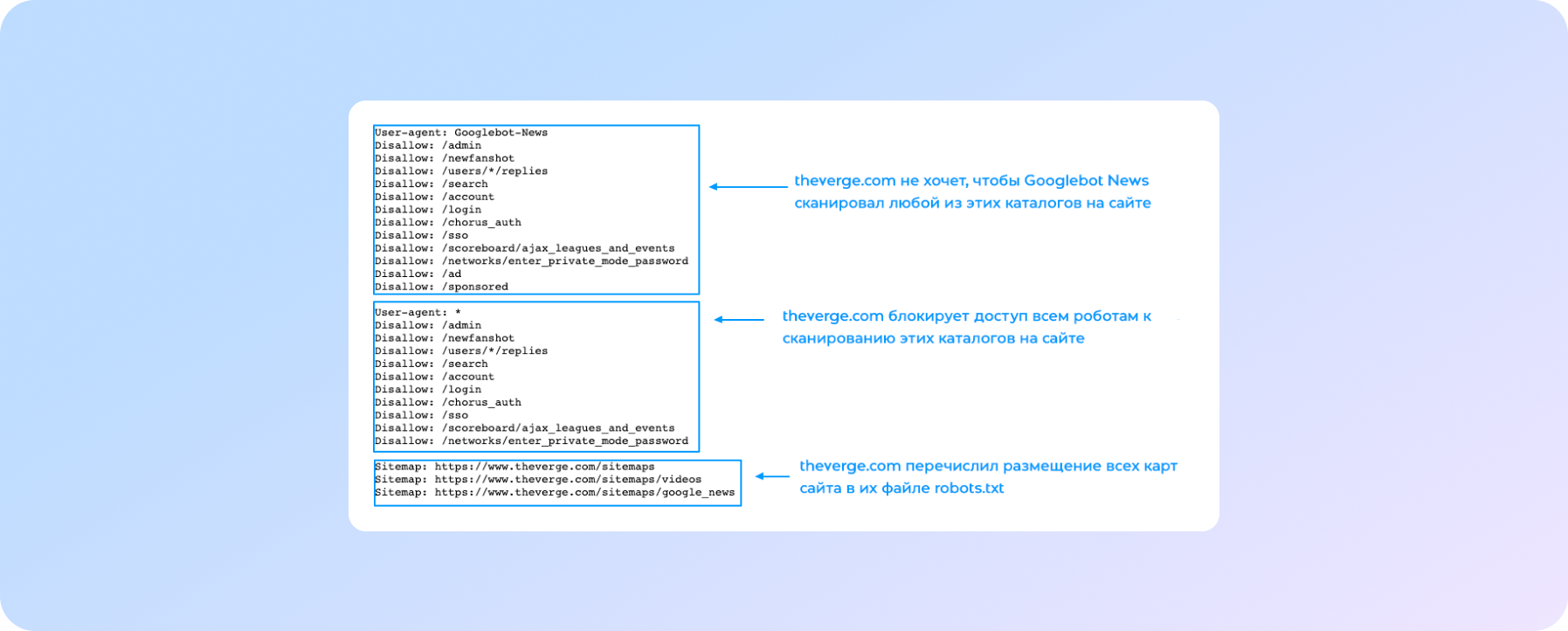

Robots.txt is a text file with instructions for search engine crawlers: which pages of the site to crawl and which to skip, and where the sitemap.xml file listing all the site's pages is located. This helps you use your crawl budget more efficiently.
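To see how a crawler reads such instructions, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The domain `www.example.com` and all paths are hypothetical. Keep in mind that this parser uses simple prefix matching, applies the first matching rule, and does not understand Google's `*` and `$` wildcards, so it only approximates how Googlebot interprets a file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() takes the file's lines directly, so no network access is needed
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch(user_agent, url) reports whether the rules allow crawling the URL
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/robots-guide"))  # True
print(parser.can_fetch("Googlebot", "https://www.example.com/cart/checkout"))      # False
# site_maps() (Python 3.8+) returns the Sitemap URLs declared in the file
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```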

You might be wondering what crawl budget is. Simply put, it is the number of pages Googlebot can and wants to crawl on your site within a certain period, for example during one visit.

Why does this limit matter? A website with 200 pages will be crawled quickly, and its pages will be updated in search promptly. The problem arises for large online stores with 10,000 pages or more. Because of the limit, Google may spend its crawl on unimportant or automatically generated pages (filters, sorting, etc.) while skipping the pages that could drive traffic and conversions.
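The effect can be put into numbers with a back-of-the-envelope sketch. It assumes, purely for illustration, a fixed budget of 2,000 pages per visit and a hypothetical store whose 10,000 product pages come with 40,000 auto-generated sorting and filtering URLs; real crawl budgets are neither fixed nor published by Google.

```python
import math

def visits_to_cover(page_count: int, budget_per_visit: int) -> int:
    """Visits needed to crawl every page once, assuming a fixed per-visit budget."""
    return math.ceil(page_count / budget_per_visit)

BUDGET = 2_000            # hypothetical pages crawled per visit
PRODUCT_PAGES = 10_000    # pages that can bring traffic and sales
GENERATED_PAGES = 40_000  # hypothetical sorting/filtering variants

# Nothing blocked: the budget is shared with the generated URLs
print(visits_to_cover(PRODUCT_PAGES + GENERATED_PAGES, BUDGET))  # 25
# Sorting/filtering URLs blocked: the same budget covers the products much sooner
print(visits_to_cover(PRODUCT_PAGES, BUDGET))  # 5
```

In this toy model, only one URL in five is a product page when nothing is blocked, so it takes five times as many visits before every product page has been crawled once.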

Google has officially stated that "having many low-value-add URLs can negatively affect a site's crawling and indexing." That is why we should use every opportunity to keep such pages out of the crawl.

How can we use the robots.txt file?

Before crawling a site's pages, Googlebot requests and reads its robots.txt file, and in most cases it follows the directives specified there.

Be extremely careful when working with the robots.txt file. If you do not fully understand how a particular directive works, you can accidentally prevent Googlebot from crawling the site.

With robots.txt, we can:

- block Googlebot's access to technical parts of the site (such as a development environment);

- close internal site search pages from crawling;

- block access to service pages: shopping cart, login, registration, and others;

- keep images, PDF files, etc. from being crawled;

- tell the robot at which URL the XML sitemap is located.
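Here is a sketch of what a file covering those uses might look like, checked with Python's standard-library `urllib.robotparser`. All hosts and paths are hypothetical, and the development environment is assumed to live on its own host (`dev.example.com`) serving a file that blocks everything. Blocking PDFs by extension is usually written with Google's wildcard syntax (`Disallow: /*.pdf$`), which `urllib.robotparser` does not understand, so the sketch assumes the PDFs sit in a single folder instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the production site www.example.com
PRODUCTION = """\
User-agent: *
# Internal site search results
Disallow: /search/
# Service pages: cart, login, registration
Disallow: /cart/
Disallow: /login/
Disallow: /register/
# Hypothetical folder holding the site's PDF files
Disallow: /files/pdf/

Sitemap: https://www.example.com/sitemap.xml
"""

# Hypothetical robots.txt served by a development copy at dev.example.com:
# "Disallow: /" blocks the whole host
DEVELOPMENT = """\
User-agent: *
Disallow: /
"""

def allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Check a URL against robots.txt text without fetching anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

print(allowed(PRODUCTION, "https://www.example.com/catalog/shoes"))             # True
print(allowed(PRODUCTION, "https://www.example.com/search/?q=shoes"))           # False
print(allowed(PRODUCTION, "https://www.example.com/files/pdf/price-list.pdf"))  # False
print(allowed(DEVELOPMENT, "https://dev.example.com/catalog/shoes"))            # False
```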

There are no universal rules for creating a robots.txt file, because the types and number of pages that need to be blocked vary with the kind of site and the CMS. For example:

- WordPress generates many technical duplicate pages that often cannot be eliminated, so they need to be blocked from crawling.

- On large online stores, the pages for sorting, filtering, and searching for products need to be blocked from crawling.
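Rules like these are usually written with wildcards, and Google resolves them differently from simple prefix matching: `*` matches any sequence of characters, a trailing `$` marks the end of the URL, the longest matching rule wins, and on a tie the less restrictive `Allow` wins. The sketch below models just that logic under those assumptions; it skips percent-encoding normalization and user-agent grouping, and the function names `rule_to_regex` and `is_allowed` as well as the store paths are hypothetical. The WordPress pair mirrors the rules WordPress writes into its default virtual robots.txt.

```python
import re
from urllib.parse import urlsplit

def rule_to_regex(pattern: str):
    """Translate a path pattern: '*' matches any characters, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))

def is_allowed(rules, url: str) -> bool:
    """rules: (directive, pattern) pairs from one user-agent group, e.g. ("Disallow", "/cart/")."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query  # rules are matched against path plus query
    best_length, allowed = -1, True  # no matching rule means the URL is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty value (e.g. "Disallow:") matches nothing
        if rule_to_regex(pattern).match(target):
            is_allow = directive.lower() == "allow"
            # The longest matching pattern wins; on a tie, Allow beats Disallow
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed

# Hypothetical online-store rules: sorting, filtering and internal search
STORE = [("Disallow", "/*?sort="), ("Disallow", "/*?filter="), ("Disallow", "/search/")]
# WordPress default rules, in the order WordPress writes them
WORDPRESS = [("Disallow", "/wp-admin/"), ("Allow", "/wp-admin/admin-ajax.php")]

print(is_allowed(STORE, "https://shop.example.com/catalog/shoes"))             # True
print(is_allowed(STORE, "https://shop.example.com/catalog/shoes?sort=price"))  # False
print(is_allowed(WORDPRESS, "https://example.com/wp-admin/options.php"))       # False
print(is_allowed(WORDPRESS, "https://example.com/wp-admin/admin-ajax.php"))    # True
```

The `Allow` rule for `admin-ajax.php` wins even though it comes second, because it is the longer match; in this model the order of rules inside a group does not matter.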

Examples of Robots.txt

Below are several variants of the robots.txt file, each with an explanation of how it works.

| robots.txt | What it does |
| --- | --- |
| `User-agent: *`<br>`Disallow:` | Allows all robots to crawl all content on the website. |
| `User-agent: Googlebot`<br>`Disallow: /` | Blocks Googlebot from crawling the website. Apart from the user agent, the only difference from the previous example is a single character, the `/` after `Disallow:`, yet the result is the opposite. |
| `User-agent: *`<br>`Disallow: /thankyou.html` | Blocks all robots from crawling the page /thankyou.html. |
| `User-agent: *`<br>`Disallow: /cgi-bin/`<br>`Disallow: /tmp/`<br>`Disallow: /junk/` | Blocks all robots from crawling the /cgi-bin/, /tmp/, and /junk/ directories. |
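The four variants in the table can be checked mechanically. A minimal sketch using Python's standard-library `urllib.robotparser`; the host `www.example.com`, the sample paths, and `Bingbot` as a second crawler name are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# The four robots.txt variants from the table
EXAMPLES = {
    "allow_all": "User-agent: *\nDisallow:",
    "block_googlebot": "User-agent: Googlebot\nDisallow: /",
    "block_page": "User-agent: *\nDisallow: /thankyou.html",
    "block_dirs": "User-agent: *\nDisallow: /cgi-bin/\nDisallow: /tmp/\nDisallow: /junk/",
}

def can_crawl(robots_txt: str, agent: str, path: str) -> bool:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Hypothetical host; only the path matters for matching
    return parser.can_fetch(agent, "https://www.example.com" + path)

print(can_crawl(EXAMPLES["allow_all"], "Googlebot", "/any/page"))          # True
print(can_crawl(EXAMPLES["block_googlebot"], "Googlebot", "/any/page"))    # False
print(can_crawl(EXAMPLES["block_googlebot"], "Bingbot", "/any/page"))      # True
print(can_crawl(EXAMPLES["block_page"], "Bingbot", "/thankyou.html"))      # False
print(can_crawl(EXAMPLES["block_dirs"], "Googlebot", "/tmp/report.html"))  # False
print(can_crawl(EXAMPLES["block_dirs"], "Googlebot", "/blog/"))            # True
```

The `Bingbot` line shows that the second file restricts only Googlebot: a crawler that matches no group, when there is no `User-agent: *` group, is allowed everywhere.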

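Keep in mind that robots.txt controls crawling, not indexing, and not every crawler obeys it. A URL blocked in robots.txt can still be indexed, for example when other pages link to it, so you may see pages in Google Search Console marked "Indexed, though blocked by robots.txt". In such cases, we recommend using the meta robots tag with the noindex value (for example, `<meta name="robots" content="noindex, nofollow">`) and removing the robots.txt block for those pages: Googlebot can only see the tag on pages it is allowed to crawl.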
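Google can only read a noindex tag on a page it is allowed to crawl, so a page that is both blocked in robots.txt and marked noindex may stay in the index. A minimal sketch of a consistency check with Python's `urllib.robotparser`: given the robots.txt text and a list of pages that carry a noindex tag, it returns the pages whose tag Googlebot will never see. The function name `hidden_noindex` and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def hidden_noindex(robots_txt: str, noindex_urls: list, agent: str = "Googlebot") -> list:
    """Return noindex pages that robots.txt blocks, so the tag cannot be read."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(agent, url)]

ROBOTS_TXT = "User-agent: *\nDisallow: /thankyou.html"
# Hypothetical pages that carry <meta name="robots" content="noindex, nofollow">
NOINDEX_PAGES = [
    "https://www.example.com/thankyou.html",
    "https://www.example.com/tag/misc/",
]
print(hidden_noindex(ROBOTS_TXT, NOINDEX_PAGES))
# ['https://www.example.com/thankyou.html']
```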

You can read more about [how Google interprets the robots.txt specification](https://developers.google.com/search/docs/advanced/robots/robots_txt) in the official documentation. Also note that Google enforces a 500 KiB size limit on robots.txt files; any content beyond that limit is ignored.
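If your robots.txt is generated automatically, a simple size check can catch an oversized file before it goes live. A minimal sketch, assuming the limit applies to the file's UTF-8 encoded bytes (500 KiB = 500 × 1024 bytes); the function names are hypothetical.

```python
# Google's documented robots.txt size limit: 500 KiB (500 x 1024 bytes)
MAX_ROBOTS_BYTES = 500 * 1024

def within_google_limit(robots_txt: str) -> bool:
    """True if the UTF-8 encoded file fits within the limit."""
    return len(robots_txt.encode("utf-8")) <= MAX_ROBOTS_BYTES

def ignored_bytes(robots_txt: str) -> int:
    """Number of bytes past the limit, which Google would ignore."""
    return max(0, len(robots_txt.encode("utf-8")) - MAX_ROBOTS_BYTES)

sample = "User-agent: *\nDisallow: /tmp/\n"
print(within_google_limit(sample), ignored_bytes(sample))  # True 0
```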

How to create robots.txt

First, check whether your website already has a robots.txt file. That's easy: open www.yourdomain.com/robots.txt in a browser, replacing www.yourdomain.com with your website's domain name. Nothing found? Go to the next step.

Creating the robots.txt file is a fairly simple process, and Google's documentation explains how to do it. You can also test your robots.txt file with a dedicated Google tool. Editing the file is no harder than working in a text editor.

Keep in mind that if you have subdomains, each of them needs its own robots.txt file.
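Because a robots.txt file applies only to the host it is served from, the file that governs any page sits at the root of that page's own host. A small sketch with Python's `urllib.parse` shows how a crawler locates it; the function name `robots_txt_url` and the URLs are hypothetical. The scheme and port are kept because Google also treats different protocols and port numbers as separate sites for robots.txt purposes.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for page_url: same scheme, host and port, path /robots.txt."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_txt_url("https://www.example.com/catalog/shoes?sort=price"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://blog.example.com/2024/post/"))
# https://blog.example.com/robots.txt
```

The subdomain `blog.example.com` gets its own file, so rules in `www.example.com/robots.txt` do not apply to it.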

Useful tips:

- Make sure that all important pages of your site are open for crawling and low-value ones are blocked;

- Keep JavaScript and CSS files open for crawling;

- Check your robots.txt file from time to time to make sure it has not been changed by accident;

- Use the correct capitalization of directory, subdirectory, and file names, since paths in robots.txt are case-sensitive;

- Remember that the file must be named “robots.txt” and placed in the root directory of your website;

- Do not use robots.txt to hide private user information: the file is publicly readable, and blocked URLs can still be indexed;

- Add your sitemap's location to robots.txt;

- Double-check that crawling of the important website directories is allowed.
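Several of these tips (keep important pages, CSS, and JavaScript open; mind the capitalization) can be turned into an automated check to run before publishing a new robots.txt. A minimal sketch using Python's `urllib.robotparser`; the function `blocked_important_urls`, the draft file, and the URL list are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def blocked_important_urls(robots_txt: str, urls: list, agent: str = "Googlebot") -> list:
    """Return the URLs from the list that the robots.txt rules would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]

# Hypothetical draft that accidentally blocks the site's CSS and JavaScript
DRAFT = """\
User-agent: *
Disallow: /cart/
Disallow: /assets/
"""
IMPORTANT = [
    "https://www.example.com/",
    "https://www.example.com/catalog/shoes",
    "https://www.example.com/assets/site.css",
    "https://www.example.com/assets/app.js",
]
print(blocked_important_urls(DRAFT, IMPORTANT))
# ['https://www.example.com/assets/site.css', 'https://www.example.com/assets/app.js']

# Paths are case-sensitive: a rule for /Cart/ does not block /cart/
print(blocked_important_urls("User-agent: *\nDisallow: /Cart/", ["https://www.example.com/cart/"]))
# []
```

An empty result means nothing on the list is blocked, which makes the check easy to wire into a deployment step.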

Conclusion

Setting up robots.txt is not as difficult as it may seem at first, and doing it correctly helps you optimize your crawl budget. As a result:

- search engine bots will not waste time crawling useless pages,

- all essential pages will be crawled in time and shown in search results (SERPs).

If you are interested in a professional technical site audit, contact us right now.

We care about improving your sales :)